Key Takeaways

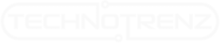

- Strategic Inference Focus: Microsoft unveiled Maia 200, a custom AI chip manufactured on TSMC’s 3-nanometer process, engineered for AI inference rather than training, delivering 10+ petaFLOPS at 4-bit precision (FP4).

- Competitive Performance Claims: Microsoft claims Maia 200 delivers 3x the FP4 performance of Amazon Trainium 3 and FP8 performance exceeding Google’s TPU v7, while achieving 30% better performance per dollar than the competing hardware in Microsoft’s fleet.

- Data Center Deployment: Maia 200 chips are now live in Microsoft’s Iowa data center with expansion to Arizona planned, powering Microsoft 365 Copilot, Azure AI Foundry, and OpenAI’s GPT-5.2 models—signaling production-scale readiness.

- Software Ecosystem Challenge: Microsoft is bundling open-source tools (Triton, co-developed with OpenAI) to directly challenge Nvidia’s CUDA software dominance and reduce developer lock-in to Nvidia’s ecosystem.

Quick Recap

Microsoft announced on January 26, 2026, that it has launched Maia 200, a custom-built AI inference accelerator chip manufactured by TSMC on 3-nanometer process technology. The chip is now deployed in Microsoft’s Iowa data center and will expand to Arizona, powering Microsoft’s AI services and OpenAI’s models through Azure infrastructure. Microsoft positions Maia 200 as a breakthrough inference accelerator explicitly designed to reduce the company’s dependence on Nvidia GPUs for AI token generation and to improve the operational efficiency of serving those tokens.

Maia 200 Architecture

Inference Optimization Over Training

Microsoft’s Maia 200 represents a deliberate architectural pivot toward inference-optimized silicon. Unlike Nvidia’s H200 GPU, which balances training and inference, Maia 200 is purpose-built for inference—where trained AI models generate real-time responses to user queries at scale.

The technical design reflects this specialization. Maia 200 is built on TSMC’s 3-nanometer process with over 140 billion transistors, delivering more than 10 petaFLOPS in 4-bit precision (FP4) and 5 petaFLOPS in 8-bit precision (FP8) within a 750-watt thermal envelope. The chip integrates 216GB of HBM3e memory running at 7 TB/s bandwidth plus 272MB of on-chip SRAM—a deliberate emphasis on fast, local memory access to reduce latency-sensitive inference bottlenecks.
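The headline figures above can be turned into two derived ratios that are easier to compare across chips: peak throughput per watt, and the roofline "ridge point" (how many operations a workload must perform per byte of HBM traffic before compute, rather than memory, becomes the limit). The following is a minimal sketch that uses only the vendor-stated peaks quoted in this article; it treats "more than 10 petaFLOPS" as exactly 10, and the function names are illustrative.

```python
# Vendor-stated peak figures quoted in the article (not measured results).
FP4_PFLOPS = 10.0     # "more than 10 petaFLOPS" at FP4, assumed to be exactly 10 here
FP8_PFLOPS = 5.0      # petaFLOPS at FP8
TDP_WATTS = 750.0     # thermal envelope
HBM_TB_PER_S = 7.0    # HBM3e bandwidth


def tflops_per_watt(pflops: float, watts: float) -> float:
    """Peak teraFLOPS per watt of thermal envelope."""
    return pflops * 1_000 / watts


def ridge_point(pflops: float, tb_per_s: float) -> float:
    """FLOPs per byte of HBM traffic at which peak compute equals peak bandwidth."""
    return (pflops * 1e15) / (tb_per_s * 1e12)


if __name__ == "__main__":
    for label, pflops in (("FP4", FP4_PFLOPS), ("FP8", FP8_PFLOPS)):
        print(
            f"{label}: {tflops_per_watt(pflops, TDP_WATTS):.1f} TFLOPS/W, "
            f"ridge point {ridge_point(pflops, HBM_TB_PER_S):.0f} FLOPs/byte"
        )
```

On these numbers the FP4 peak works out to roughly 13.3 TFLOPS per watt, with a ridge point of about 1,429 FLOPs per byte. A ridge point that high suggests that workloads with little data reuse will be limited by memory bandwidth long before they reach peak compute, which is consistent with the emphasis on fast local memory.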

The SRAM integration is architecturally significant. While Nvidia’s H200 leans on HBM3e capacity to serve training workloads, Maia 200 pairs its HBM with a large pool of on-chip SRAM to prioritize low-latency access, a design choice that mirrors competing inference specialists like Cerebras and Groq. This architecture directly addresses the economic reality of AI inference at scale: inference costs are now the dominant operational expense for cloud providers serving millions of concurrent users, and latency matters as much as throughput.
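One way to see why memory speed dominates inference latency is a common back-of-the-envelope bound: when generating one token at a time, every model weight has to be read from memory once per token, so bandwidth divided by model size caps tokens per second for a single stream. The sketch below applies that bound to the 7 TB/s figure quoted above. The 70-billion-parameter model is a hypothetical example, and the bound ignores KV-cache traffic, batching, and on-chip SRAM reuse, so it is an illustration rather than a prediction of Maia 200 performance.

```python
def decode_tokens_per_second(
    params_billion: float, bytes_per_param: float, hbm_tb_per_s: float
) -> float:
    """Upper bound on single-stream decode speed if each token reads all weights once."""
    weight_bytes = params_billion * 1e9 * bytes_per_param
    return hbm_tb_per_s * 1e12 / weight_bytes


def weights_fit_in_hbm(
    params_billion: float, bytes_per_param: float, hbm_gb: float
) -> bool:
    """Check whether the raw weights fit in HBM (ignores activations and KV cache)."""
    return params_billion * bytes_per_param <= hbm_gb


if __name__ == "__main__":
    # Hypothetical 70B-parameter model; 0.5 bytes per parameter at FP4, 1 byte at FP8.
    for label, bytes_per_param in (("FP4", 0.5), ("FP8", 1.0)):
        bound = decode_tokens_per_second(70, bytes_per_param, 7.0)
        fits = weights_fit_in_hbm(70, bytes_per_param, 216)
        print(f"{label}: <= {bound:.0f} tokens/s per stream, fits in 216GB: {fits}")
```

Under these assumptions the bound is about 200 tokens per second at FP4 and 100 at FP8. Halving the bytes per weight doubles the ceiling, which is one reason low-precision formats and fast memory matter more for inference than raw peak FLOPS.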

Microsoft’s performance figures come from its own vendor benchmarks, which compare Maia 200 directly against competitors. The claims: 3x the FP4 performance of Amazon Trainium 3, FP8 performance exceeding Google TPU v7, and 30% better performance per dollar than competing hardware in Microsoft’s fleet. Notably, Microsoft acknowledges it will continue purchasing Nvidia GPUs for model training workloads. Maia 200 is purely a demand-side optimization for inference, not a complete Nvidia replacement.

Competitive Comparison: Microsoft Maia 200 vs. Industry Alternatives

| Metric | Microsoft Maia 200 | Nvidia H200 | Google TPU v7 Ironwood |
| --- | --- | --- | --- |
| Process Technology | TSMC 3nm; 140B transistors | TSMC 4nm; 80B transistors | TSMC 3nm; undisclosed transistor count |
| Peak Performance (FP4/FP8) | 10+ PFLOPS (FP4); 5 PFLOPS (FP8) | Not FP4-native; optimized for FP16/BF16 | Not FP4-native; 4.6 PFLOPS FP8 |
| Memory Architecture | 216GB HBM3e (7 TB/s) + 272MB SRAM; 750W TDP | 141GB HBM3e (4.8 TB/s); 500W TDP | 192GB HBM (7.4 TB/s); est. 600-1000W TDP |
| Inference Performance (Tokens/sec, Llama 2 70B) | Undisclosed; vendor claims 3x FP4 vs Trainium3 | ~31,712 tokens/sec (MLPerf data) | No published MLPerf inference data |
| Cost Efficiency | 30% better perf/dollar vs. competing systems in Microsoft fleet | ~1.9x faster inference than H100; enterprise GPU pricing | Cloud pricing for TPU v7; no public per-chip list pricing |
| Target Workload | AI inference; Copilot, GPT-5.2 serving, synthetic data | Mixed training + inference; dominant for training | Both training and inference; TPU v5e focused on inference |
| Developer Ecosystem | Triton (open-source, OpenAI-backed); emerging adoption | CUDA (proprietary); 15+ years, 5M+ developers, dominant | TensorFlow/JAX; strong in Google Cloud but smaller than CUDA |
| Production Deployment | Iowa, Arizona data centers; Microsoft-only (no external sales) | Widely available; AWS, Azure, GCP, on-premise; >1000s deployed | Google Cloud only; limited external availability |
| Scaling Capability | Up to 6,144 chips per cluster with standard Ethernet | NVLink + InfiniBand for 72-GPU clusters; then Ethernet | ICI proprietary networking; scales to 9,216 chips per pod |

Strategic Analysis: On Microsoft’s own figures, Maia 200 leads on inference-specific optimization and cost per inference token for Microsoft’s internal workloads; the vendor claims of a 3x FP4 advantage over Trainium3 and 30% better perf-per-dollar reflect this focus. However, Nvidia H200 retains training versatility and developer ecosystem dominance with CUDA’s 15+ year head start, while Triton adoption around Maia 200 remains nascent. Google TPU v7 competes strongly on FP8 performance (4.6 PFLOPS, comparable to Maia’s 5 PFLOPS) and massive scaling (9,216 chips per pod), but is available only through Google Cloud and lacks public inference benchmarks.

The competitive winner depends on workload: Maia 200 leads for inference-only cost optimization; H200 leads for mixed training/inference and ecosystem portability; TPU v7 leads for scale and Google’s proprietary workloads. None displaces the others—instead, they fragment the market.
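To make the FP8 and scaling figures in the comparison concrete, the sketch below multiplies each chip’s quoted FP8 peak by its quoted maximum chip count. This is a theoretical ceiling only: it assumes perfect scaling, and a Maia "cluster" and a TPU "pod" may not be equivalent units, so the totals are illustrative rather than a like-for-like benchmark. The class and variable names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Accelerator:
    name: str
    fp8_pflops: float  # vendor-stated FP8 peak per chip, as quoted in the table
    max_chips: int     # largest quoted cluster or pod size

    def aggregate_fp8_exaflops(self) -> float:
        """Theoretical FP8 peak of a full cluster, assuming perfect scaling."""
        return self.fp8_pflops * self.max_chips / 1_000


MAIA_200 = Accelerator("Maia 200", fp8_pflops=5.0, max_chips=6_144)
TPU_V7 = Accelerator("TPU v7", fp8_pflops=4.6, max_chips=9_216)

if __name__ == "__main__":
    per_chip_ratio = MAIA_200.fp8_pflops / TPU_V7.fp8_pflops
    print(f"Per-chip FP8 ratio (Maia 200 / TPU v7): {per_chip_ratio:.2f}x")
    for chip in (MAIA_200, TPU_V7):
        total = chip.aggregate_fp8_exaflops()
        print(f"{chip.name}: {total:.2f} exaFLOPS FP8 at {chip.max_chips} chips")
```

On the quoted figures, Maia 200 is about 9% ahead per chip at FP8, while the larger TPU v7 pod reaches the higher theoretical aggregate (about 42.4 versus 30.7 exaFLOPS). That matches the reading above that each chip leads on a different axis.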

The Hyperscaler AI Chip Arms Race Accelerates

Microsoft’s Maia 200 launch reflects a structural shift in cloud computing: the “Big Three” (Microsoft, Google, Amazon) are fragmenting their hardware stacks away from Nvidia’s unified ecosystem and toward proprietary, workload-specific silicon. This shift addresses two strategic pressures simultaneously.

First, cost management at scale. Nvidia’s market dominance enabled pricing power—H200 GPUs rent for $15,000-30,000 monthly via third-party brokers, creating intense margin pressure for cloud providers. Microsoft’s claim of 30% better perf-per-dollar with Maia 200 directly addresses this economics problem. If the claim holds, deploying Maia 200 for inference workloads could save Microsoft billions annually across its Azure AI services.
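It is worth spelling out what "30% better perf-per-dollar" means for a bill, because it is not a 30% cost cut. If a chip does 1.3x the work per dollar, the same work costs 1/1.3 of the original spend, a saving of about 23%. The sketch below shows the arithmetic; the $1 billion baseline is a purely hypothetical figure for illustration, not a reported Microsoft number.

```python
def cost_for_same_work(perf_per_dollar_gain: float) -> float:
    """Fraction of the original spend needed to do the same amount of work."""
    return 1.0 / (1.0 + perf_per_dollar_gain)


def savings_fraction(perf_per_dollar_gain: float) -> float:
    """Fraction of the original spend that is saved for the same amount of work."""
    return 1.0 - cost_for_same_work(perf_per_dollar_gain)


if __name__ == "__main__":
    gain = 0.30  # Microsoft's claimed perf-per-dollar improvement
    baseline_spend = 1_000_000_000  # hypothetical annual inference spend in dollars
    saved = baseline_spend * savings_fraction(gain)
    print(f"Same work costs {cost_for_same_work(gain):.1%} of the baseline")
    print(f"Saving on a hypothetical $1B spend: ${saved:,.0f}")
```

Under this reading, every $1 billion of inference spend moved to Maia 200 would save roughly $231 million, so savings in the billions would require a correspondingly large baseline and the claim holding up in production.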

Second, software ecosystem lock-in. Nvidia’s CUDA platform is the de facto standard for AI development, but this centralization creates strategic vulnerability for cloud providers dependent on Nvidia’s continued innovation and licensing terms. Microsoft’s push of Triton (an open-source alternative to CUDA) with Maia 200 signals an effort to commoditize AI software development and reduce dependency on proprietary standards.

Competitive implications are significant. Amazon’s Trainium line, now including Trainium3, follows a similar inference-optimization strategy. Google’s TPU v7 emphasizes massive scaling and efficiency. Collectively, these custom chips are eroding Nvidia’s serviceable addressable market (SAM). However, Nvidia’s advantages persist: H200’s dominance in training (where the most compute-intensive work occurs), the depth of the CUDA ecosystem, developer comfort, and proven reliability. Nvidia has signaled its own inference acceleration roadmap with the Vera Rubin chips, suggesting competitive dynamics will intensify.

Regulatory considerations also matter. Nvidia faces export restrictions to China and growing antitrust scrutiny in Europe and the US. Custom silicon investments by hyperscalers may accelerate not from technical superiority alone but from regulatory risk mitigation, since having alternative compute sources reduces geopolitical and competitive vulnerability.

TechnoTrenz’s Takeaway

I think this is a big deal because it shows that even Nvidia’s most powerful customers are now willing to invest billions in chip design just to reduce reliance on Nvidia—that’s an inflection point. In my experience covering semiconductor markets, this rarely happens. Nvidia’s CUDA ecosystem is so entrenched that custom silicon efforts typically fail or remain niche. But Microsoft, Google, and Amazon are simultaneously rolling out custom chips optimized for their own workloads. That’s not competition anymore—that’s fragmentation of what used to be Nvidia’s monopoly.

This is bearish for Nvidia’s long-term margins on inference chips, but not bearish for Nvidia overall. Training remains Nvidia’s fortress. The H200’s dominance in model development and fine-tuning is unmatched. The real risk isn’t that Maia 200 replaces H200s—it won’t. The risk is that inference workloads (which are the majority of production AI compute now) gradually migrate to custom silicon, shrinking Nvidia’s addressable market and reducing pricing power. That margin compression could happen over 2-4 years as Microsoft, Google, and Amazon deploy Maia, TPU v7, and Trainium3 at scale.

I’m watching three things. First, whether Microsoft’s 30% cost advantage claim holds in real-world production or turns out to be cherry-picked benchmarks. Custom silicon always looks good in lab tests. Second, whether Triton adoption actually scales—if developers stick with CUDA because it’s easier and more stable, Maia 200 becomes an internal-use chip with limited external TAM. Third, whether Nvidia successfully launches Vera Rubin and reasserts dominance in inference. If Vera Rubin outperforms Maia 200 by 20%+ and CUDA’s ecosystem advantage persists, hyperscalers may dial back custom chip investment.

For now, I’m cautiously bullish on Maia 200’s success within Microsoft’s ecosystem but more reserved about the industry-wide implications. Maia 200 will definitely reduce Microsoft’s Nvidia spending and improve Azure’s inference economics. That’s real and material. But displacing Nvidia entirely requires multiple things: sustained technology leadership (Maia 300, 400 generations must stay competitive), developer ecosystem growth for Triton, and continued hyperscaler conviction. If Nvidia executes well on Vera Rubin and keeps CUDA’s advantages, we end up with a bifurcated market: training on H200/B200, inference split between Maia/TPU/Trainium for internal use and H200/B200 for external cloud customers. That’s not Nvidia being displaced; it’s Nvidia’s market fragmenting. Either outcome is bad for Nvidia’s long-term valuation, but not catastrophic.